| Dual Virtual Radial Fitts' Task (dual-VRFT) | |
|---|---|
| Type: | Mental Chronometry |
| Dimension(s): | Timing |
| Repository: | https://github.com/carlacz/dual-VRFT |
| Citation: | Czilczer et al. (in preparation) |

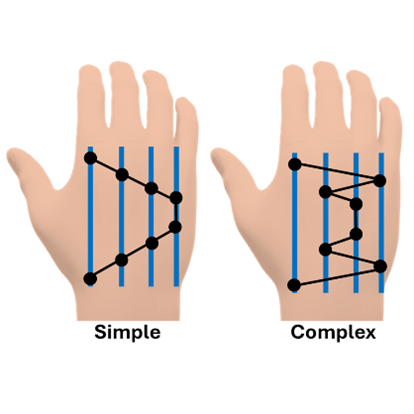

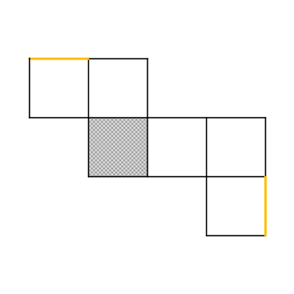

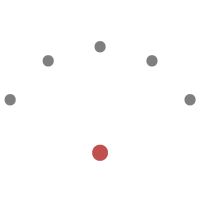

The Dual Virtual Radial Fitts’ Task (dual-VRFT) is an adaptation of the Virtual Radial Fitts’ Task (VRFT; e.g., Caeyenberghs et al., 2009), which is based on Fitts’ law (Fitts, 1954). Participants tap, and imagine tapping, between a central circle and five radially arranged targets in a specified order. Movement difficulty is varied across trials by manipulating target width. To ensure that movement times are measured identically in execution and imagery, participants press the space bar with their non-dominant hand for each executed and imagined tap.

In the dual-VRFT, Fitts’ law holds for both execution and imagery, with imagery being slower than execution (Czilczer et al., in preparation). The task assesses timing-related movement imagery ability by capturing how accurately the difficulty of a movement is reflected in imagery.

The current version (Czilczer et al., in preparation) includes one block each for execution and imagery, with five difficulty levels presented across trials. A familiarization with tapping targets of varying sizes, a comprehension check, and practice trials precede the main task. The task requires a stylus with a fine tip. A touchscreen is recommended because it provides a stable tapping surface, but it is not strictly required.

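The relationship the task relies on can be illustrated with a short sketch. Fitts' (1954) original formulation expresses movement time as a linear function of the index of difficulty, MT = a + b · ID, with ID = log2(2D / W) for target distance D and target width W. The code below is only an illustration under stated assumptions: the distance, the five widths, and the movement times are synthetic placeholder values generated from arbitrary lines, not parameters or data from the dual-VRFT, and the function names are hypothetical rather than taken from the task's repository.

```python
import math


def index_of_difficulty(distance, width):
    """Fitts' (1954) index of difficulty in bits: log2(2D / W)."""
    return math.log2(2 * distance / width)


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = a + b * xs; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# ASSUMPTION: hypothetical geometry, chosen only so the IDs come out as 2..6 bits.
DISTANCE_MM = 80.0
WIDTHS_MM = [40.0, 20.0, 10.0, 5.0, 2.5]

ids = [index_of_difficulty(DISTANCE_MM, w) for w in WIDTHS_MM]

# ASSUMPTION: synthetic movement times in seconds, generated from arbitrary
# lines (0.2 + 0.1 * ID and 0.3 + 0.12 * ID). These are NOT study results.
execution_mt = [0.2 + 0.1 * i for i in ids]
imagery_mt = [0.3 + 0.12 * i for i in ids]

exec_a, exec_b = fit_line(ids, execution_mt)
imag_a, imag_b = fit_line(ids, imagery_mt)

print(f"execution: MT = {exec_a:.3f} + {exec_b:.3f} * ID")
print(f"imagery:   MT = {imag_a:.3f} + {imag_b:.3f} * ID")
```

Fitting the same line separately to executed and imagined movement times gives one way to compare how strongly difficulty is reflected in each condition. Whether the dual-VRFT analysis uses this exact formulation of ID or this fitting procedure is not stated here, so treat the sketch as one possible reading.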